There are arguments for and against disabling low data rates, and it’s my hope that, after reading this blog post, you will understand why disabling lower legacy data rates may help your WLAN perform better.

My only basic (mandatory) rate is typically 12M. All rates below 12M are disabled. All rates above 12M are enabled/optional.
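The policy above can be written down as a small table. This is a minimal sketch, assuming the standard legacy rate lists for each band. `rate_policy` and `LEGACY_RATES` are hypothetical names used for illustration, not any vendor’s configuration syntax.

```python
# Legacy (non-MCS) rates per band, in Mbps.
LEGACY_RATES = {
    "2.4GHz": [1, 2, 5.5, 6, 9, 11, 12, 18, 24, 36, 48, 54],
    "5GHz": [6, 9, 12, 18, 24, 36, 48, 54],
}


def rate_policy(band: str, mbr: float = 12) -> dict:
    """Hypothetical helper: classify each legacy rate relative to the MBR.

    Rates below the MBR are disabled, the MBR itself is the only basic
    (mandatory) rate, and every rate above it is supported (optional).
    """
    rates = LEGACY_RATES[band]
    if mbr not in rates:
        raise ValueError(f"{mbr} Mbps is not a legacy rate in {band}")
    policy = {}
    for rate in rates:
        if rate < mbr:
            policy[rate] = "disabled"
        elif rate == mbr:
            policy[rate] = "basic"
        else:
            policy[rate] = "supported"
    return policy


if __name__ == "__main__":
    for rate, state in rate_policy("2.4GHz").items():
        print(f"{rate:>4} Mbps: {state}")
```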

MCS Rates

There are three kinds of frames in WiFi: 1) Management, 2) Control, and 3) Data. Legacy data rates (1, 2, 5.5, 11, 6, 9, 12, 18, 24, 36, 48, & 54Mbps) or MCS rates may be used for Data frames, Management frames, or Control frames carried in an A-MPDU. (802.11-2012, sections 9.7.5.6 and 9.7.6.1) Other control frames have special rules for transmission data rates. I suggest not disabling any MCS rates because the negative impacts, if any, are unknown (untested) to date.

2.4GHz

In 2.4GHz, your first goal is to get rid of non-WiFi interference sources. Your second goal is to get rid of 11b client devices. Use of 11b clients necessitates use of low (non-OFDM) data rates, which forces the use and ripple of protection mechanisms (e.g. RTS/CTS and CTS-to-Self). See this white paper.

Using 12M as your minimum basic rate (MBR) will disconnect all 11b devices and will prevent protection ripple; both free up airtime, which adds capacity to your network.

Before you über WiFi geeks start down the road of “But the PHY header is always sent at the minimum supported PHY rate for the band!”, it’s important to remember that clients have to decode the MAC header in order to understand the frame type, and that includes beacons and probe responses. The MAC header is part of the PSDU/MPDU and is sent at higher/configurable rates. For further info, see Andrew’s blog on the topic.

Management Frame Airtime (Overhead)

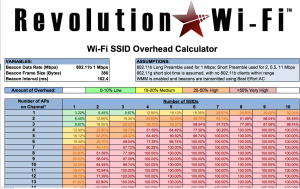

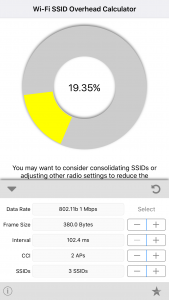

Many frames may or must use the MBR, including beacons, probe requests, probe responses, and broadcast/multicast traffic (which is more common with mDNS/Bonjour and IPv6). If you haven’t checked out Andrew Von Nagy’s SSID Overhead Calculator spreadsheet or iPhone app, just do it (right now). 🙂

Note that these tools calculate airtime consumption for beacons only. It’s very common to see two to three times as many Probe Requests and Probe Responses on a channel as Beacons, so multiply his number by two or three to estimate real-world management/discovery overhead. These tools will quickly show you how fast management/discovery traffic eats your airtime. It’s almost shocking.
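To see where the savings come from, the airtime of a single frame can be estimated from the PHY timing. The sketch below is a rough model, not a reproduction of Andrew’s calculator. It assumes 20 MHz legacy OFDM in 5GHz (20 µs of preamble plus SIGNAL, 4 µs symbols, 16 SERVICE bits and 6 tail bits), a 1Mbps DSSS long preamble for comparison, the common 102.4 ms beacon interval, and a hypothetical 300-byte beacon. It ignores DIFS, backoff, and the 6 µs signal extension used by OFDM frames at 2.4GHz.

```python
import math

# Data bits per OFDM symbol (N_DBPS) for each 20 MHz legacy OFDM rate.
# Each symbol lasts 4 µs, so N_DBPS = rate_mbps * 4.
N_DBPS = {6: 24, 9: 36, 12: 48, 18: 72, 24: 96, 36: 144, 48: 192, 54: 216}

BEACON_INTERVAL_US = 102_400  # 100 TU, the common default beacon interval


def ofdm_airtime_us(frame_bytes: int, rate_mbps: int) -> int:
    """Airtime of one legacy OFDM frame (5GHz, 20 MHz), excluding contention.

    20 µs of preamble + SIGNAL, then ceil((16 SERVICE + 8*L + 6 tail) / N_DBPS)
    data symbols of 4 µs each.
    """
    bits = 16 + 8 * frame_bytes + 6
    symbols = math.ceil(bits / N_DBPS[rate_mbps])
    return 20 + 4 * symbols


def dsss_1m_airtime_us(frame_bytes: int) -> int:
    """Airtime of a 1 Mbps DSSS frame with the long (192 µs) PLCP preamble."""
    return 192 + 8 * frame_bytes


def beacon_overhead_pct(airtime_us: float, ssids: int,
                        probe_multiplier: float = 1.0) -> float:
    """Percent of airtime used by beacons from `ssids` BSSs on one channel.

    `probe_multiplier` (e.g. 2-3, per the text above) roughly scales the
    result up to account for probe requests/responses.
    """
    return 100 * airtime_us * ssids * probe_multiplier / BEACON_INTERVAL_US


if __name__ == "__main__":
    beacon_bytes = 300  # hypothetical beacon size
    for rate in (6, 12, 24):
        t = ofdm_airtime_us(beacon_bytes, rate)
        print(f"{rate:>2}M OFDM: {t} µs, 10 SSIDs -> {beacon_overhead_pct(t, 10):.1f}%")
    t = dsss_1m_airtime_us(beacon_bytes)
    print(f" 1M DSSS: {t} µs, 10 SSIDs -> {beacon_overhead_pct(t, 10):.1f}%")
```

Under those assumptions, a 300-byte beacon takes 424 µs at 6M, 224 µs at 12M, and 124 µs at 24M, versus 2,592 µs at 1M DSSS.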

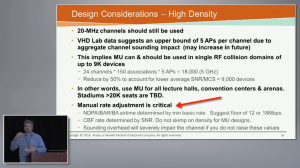

Just wait until channel sounding (which uses the MBR) gets ugly with MU-MIMO! See below from the esteemed Chuck Lukaszewski at Aruba Networks, who gave a masterful presentation on MU-MIMO at the Feb 2016 WLPC conference. Chuck suggested an MBR of 12M (which works well with Apple devices when the network is properly designed) or 18M (which may have compatibility problems with some clients). I have had a good experience with 12M in the field in a variety of scenarios, and 24M may be necessary for airtime conservation in very high density (VHD) environments.

To dive a little deeper into the “why not 18M?” question, note that the 802.11-2012 standard (Section 18.2.2.3) specifies mandatory support for the 6, 12, and 24Mbps data rates. This was originally specified for 802.11a, at a time when radio quality was quite poor compared to today’s radios. 802.11a/g radios are quickly on their way out, and in most networks they make up a very small percentage of the overall client population. With newer, better-quality radios, clients no longer need the lower data rates for stable connectivity. A very few 5GHz-capable client drivers were written to connect only if 6, 12, and 24M are all announced as “Basic Rates”. Most 5GHz-capable client drivers accept any rate or set of rates that an AP announces as Basic. Further still, a few 5GHz-capable client drivers will accept only 6, 12, or 24M as Basic, and nothing else. This is why 18M, used as the only basic rate, may not be a good idea: it can prevent some clients from connecting to the BSS.
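The compatibility concern above boils down to a simple rule of thumb: prefer basic rates drawn from the mandatory 6/12/24Mbps set. A minimal sketch, where `risky_basic_rates` is a hypothetical helper and the rule encodes only the driver behavior described in this post, not a tested compatibility matrix:

```python
# OFDM rates whose support is mandatory (802.11-2012, Section 18.2.2.3).
MANDATORY_OFDM_RATES = frozenset({6, 12, 24})


def risky_basic_rates(basic_rates):
    """Return, sorted, any basic rates outside the mandatory 6/12/24 Mbps set.

    Per the post, a few client drivers only accept 6, 12, or 24M as basic
    rates, so anything returned here may keep those clients off the BSS.
    """
    return sorted(set(basic_rates) - MANDATORY_OFDM_RATES)


if __name__ == "__main__":
    for basic in ({12}, {18}, {12, 18, 24}):
        print(sorted(basic), "->", risky_basic_rates(basic) or "OK")
```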

The portions of Chuck’s presentation that are relevant to minimum basic rates are:

24:25 – 26:11

1:09:07 – 1:10:34

1:12:28 – 1:13:33

Data Frame Airtime (Performance)

To achieve a higher SNR, either the client needs to be closer to the AP or the AP’s output power needs to be higher (not recommended, due to co-channel interference, or CCI). Having clients closer to APs means that they will use higher data rates and less airtime for data transmissions. Less airtime utilization means higher capacity per BSS.

The typical recommended indoor AP coverage from most vendors is around 2,500-3,000 square feet per AP. At that cell size, it’s important to keep output power low to avoid CCI. Keeping power low further necessitates keeping clients close to APs in order to maintain high SNR and MCS rates. Further, use of low data rates, even with Airtime Fairness enabled (if supported and working properly), consumes a BSS’s airtime quickly. Any argument for enabling lower legacy data rates is an argument for allowing clients to use those data rates, and that’s the last thing you want. Without Airtime Fairness, the negative impact to a BSS is overwhelming, essentially giving each member of the BSS the same capacity as the least capable client at any given instant.
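The “least capable client” effect can be illustrated with a simple model of saturated stations, each sending equal-size frames. This is a rough sketch that ignores all MAC/PHY overhead and contention. With per-frame fairness (no Airtime Fairness), every station gets one frame per round, so each station’s throughput is 1/Σ(1/rᵢ). With idealized airtime fairness, each of N stations gets 1/N of the airtime and therefore rᵢ/N. The function names are illustrative.

```python
def frame_fair_throughput(rates_mbps):
    """Per-station throughput (Mbps) when each station sends one equal-size
    frame per round: every station's share is 1 / sum(1 / r_i)."""
    # Time (s) for one round in which every station sends 1 megabit.
    round_time_s = sum(1 / r for r in rates_mbps)
    return 1 / round_time_s


def airtime_fair_throughput(rates_mbps):
    """Per-station throughput (Mbps) when each of N stations gets 1/N of the airtime."""
    n = len(rates_mbps)
    return [r / n for r in rates_mbps]


if __name__ == "__main__":
    rates = [54, 54, 6]  # two close clients and one distant client
    print(f"frame fairness: {frame_fair_throughput(rates):.1f} Mbps each")
    print("airtime fairness:", airtime_fair_throughput(rates))
```

With one 6Mbps client sharing the BSS with two 54Mbps clients, the model gives every station about 4.9Mbps under per-frame fairness, below even the slow client’s own rate, versus 18, 18, and 2Mbps under airtime fairness.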

One school of thought is that if frames cannot be decoded, then those frames will become noise/interference for other WiFi stations. Modifying the MBR has a near-zero effect on CCI caused by the AP, since the PLCP preamble and header are always transmitted at the band’s most robust modulation (6Mbps-equivalent BPSK for OFDM frames in either band, and 1Mbps DSSS for 11b-style frames at 2.4GHz). However, clients extend the interference radius of a BSS, and the farther they are allowed to move away from the AP (while remaining connected), the more CCI they cause. Clients don’t have to be able to receive the MPDU in order to detect the PLCP preamble and header and to back off appropriately.

Modulation Types

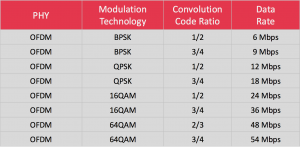

The more sophisticated (higher-order) the modulation type, the higher the RSSI and SNR you need to decode it. Said backwards, for clarity: the less sophisticated the modulation type, the lower the RSSI and SNR you need to decode it. BPSK and QPSK are the two lowest-order OFDM modulation types in use in WiFi. Because they can be decoded at longer range, they are the rates that distant clients end up using, and their slow transmissions consume the most airtime.
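The link between modulation order and data rate can be made concrete for the 20 MHz legacy OFDM rates: each rate is 48 data subcarriers × coded bits per subcarrier × coding rate, delivered every 4 µs symbol. The table reflects the 802.11a/g OFDM PHY; the helper name is illustrative.

```python
from fractions import Fraction

# (modulation, coded bits per subcarrier, coding rate) per legacy OFDM rate.
OFDM_RATES = {
    6: ("BPSK", 1, Fraction(1, 2)),
    9: ("BPSK", 1, Fraction(3, 4)),
    12: ("QPSK", 2, Fraction(1, 2)),
    18: ("QPSK", 2, Fraction(3, 4)),
    24: ("16-QAM", 4, Fraction(1, 2)),
    36: ("16-QAM", 4, Fraction(3, 4)),
    48: ("64-QAM", 6, Fraction(2, 3)),
    54: ("64-QAM", 6, Fraction(3, 4)),
}
DATA_SUBCARRIERS = 48  # per 20 MHz OFDM symbol
SYMBOL_US = 4          # symbol duration including guard interval


def ofdm_rate_mbps(bits_per_subcarrier, coding_rate):
    """Data rate = data bits per symbol / symbol time (bits per µs == Mbps)."""
    data_bits_per_symbol = DATA_SUBCARRIERS * bits_per_subcarrier * coding_rate
    return data_bits_per_symbol / SYMBOL_US


if __name__ == "__main__":
    for rate, (mod, bits, code) in OFDM_RATES.items():
        print(f"{rate:>2} Mbps: {mod:<6} rate {code} -> {ofdm_rate_mbps(bits, code)} Mbps")
```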

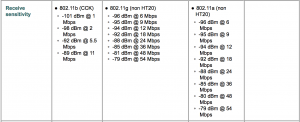

See the AP radio sensitivity chart below. Whether you’re talking about a client or AP, lower orders of modulation can be decoded at less RSSI and SNR.

The hope is that a client device will scan and attempt to roam when it reaches an RSSI (or SNR) threshold set by its manufacturer, but client device manufacturers rarely publish their receive sensitivity or roaming algorithm specifications. Therefore, one of our design goals should be that all users will have an optimum user experience given the device and applications that they’re using.

It’s interesting that even an MBR as high as 12M is still achievable at low RSSI/SNR levels. Clients should always roam before they get anywhere near the low data rates we’re proposing to disable. For example, iOS devices (v8 and later) trigger roam scanning at -70dBm and roam when there is an 8-12dB difference between the current and new APs.
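To put numbers on that, the sketch below compares a -70dBm roam-scan trigger (the iOS figure above) with the minimum receiver sensitivity the 802.11 OFDM PHY requires for each 20 MHz legacy rate. Those are the standard’s worst-case requirements; real radios are often more sensitive, so treat the margins as conservative. `margin_db` is a hypothetical helper.

```python
# Minimum receiver sensitivity (dBm) required by the 802.11 OFDM PHY
# for each 20 MHz legacy rate (Mbps).
MIN_SENSITIVITY_DBM = {6: -82, 9: -81, 12: -79, 18: -77,
                       24: -74, 36: -70, 48: -66, 54: -65}


def margin_db(rate_mbps, roam_trigger_dbm=-70):
    """dB of headroom between the roam trigger and the weakest signal at which
    the standard requires this rate to be decodable (positive = headroom)."""
    return roam_trigger_dbm - MIN_SENSITIVITY_DBM[rate_mbps]


if __name__ == "__main__":
    for rate in (6, 12, 24):
        print(f"{rate:>2} Mbps: {margin_db(rate)} dB of margin at -70 dBm")
```

With these figures, a 12M MBR still leaves about 9dB of headroom below the -70dBm trigger, so a client that roams on schedule should not need 6 or 9Mbps.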

Sticky Clients / Roaming

Sticky clients just suck. If a client device has poor SNR (either unidirectional or bidirectional), it will use unsophisticated modulation, and so will use low MCS/data rates. Equally bad, sticky clients significantly extend the interference range of a BSS, and CCI drives down network capacity. Raising the MBR to 12M raises the floor on the legacy data rates that the AP can use for its transmissions.

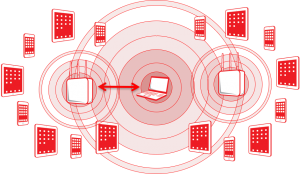

In the graphic below, you will see that the laptop in the center is associated with the AP to the left. Both APs in the graphic are on the same channel. While the APs cannot hear each other, they (and many of their other clients) can hear the laptop. In this situation, the client effectively conjoins the two BSSs and causes CCI. For this reason, it’s important that clients not stray too far from their associated AP before roaming.

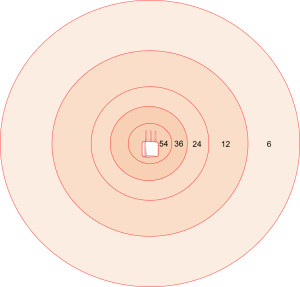

As shown in the graphic below, each data rate’s coverage area is larger than that of the rate above it, because lower-order modulation types are easier to decode at a distance. This means that lower legacy data rates will have larger coverage areas (larger concentric rings in the graphic below) than higher legacy data rates.

Poor client drivers are partially to blame for stickiness, and poor network design is the rest of the story. Good enterprise design often means use of fairly low AP power to prevent CCI. (Before you WiFi nerds start throwing out corner cases, I used the word “often” on purpose.) Turning the AP power down means that clients won’t be able to hear the AP as far, and so will be forced to roam sooner.

If client drivers take into consideration successful or failed frame transmissions at the current MCS/data rate, and not just RSSI (or SNR), then leaving low data rates enabled may make them stickier: frames keep succeeding at those low rates long after signal quality has degraded, so the driver sees little reason to roam. I know of several clients that take more than RSSI into consideration when roaming, and as a sanity check, I asked multiple architect-level engineers to review this blog before publishing. They too know of several client devices whose roaming algorithm takes into consideration more than just RSSI.

For the sake of argument, let’s look at some clients that use RSSI as their primary metric. Here is Apple’s iOS 9 roaming reference. It’s pretty clear from this reference, and from months of hands-on time testing roaming on Apple iOS-based devices, that they do exceptionally well using 12 or 24Mbps as their only MBR, with all lower data rates disabled.
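An RSSI-driven roaming rule of the kind described for iOS can be sketched in a few lines. This is a simplified illustration, not Apple’s implementation: it assumes a -70dBm scan trigger and a configurable candidate improvement within the 8-12dB range quoted earlier, and `should_roam` is a hypothetical function.

```python
def should_roam(current_rssi_dbm, candidate_rssi_dbm,
                scan_trigger_dbm=-70, min_delta_db=8):
    """Simplified RSSI-only roaming rule (illustrative, not Apple's code).

    The client only scans once its current AP drops to the trigger level,
    and only moves if the best candidate is at least min_delta_db stronger.
    """
    if current_rssi_dbm > scan_trigger_dbm:
        return False  # current AP is still strong enough; no roam scan yet
    return candidate_rssi_dbm - current_rssi_dbm >= min_delta_db


if __name__ == "__main__":
    print(should_roam(-65, -50))                   # above trigger: stays
    print(should_roam(-72, -62))                   # 10 dB better: roams
    print(should_roam(-72, -62, min_delta_db=12))  # needs 12 dB: stays
```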

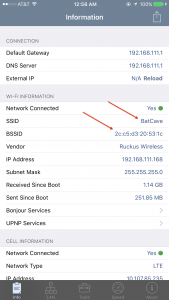

A good tool to validate that the mobile client is roaming is Net Analyzer. They have a “Lite” version that will work as well. The same tool is available both from the Apple App store (for iOS devices) and the Google Play store (for Android devices).

Notice that with this tool, you can see both the SSID and BSSID that the client is associated with. As you roam from place to place, you will see your BSSID change. It has worked like a champ for me, for months.

By having a higher MBR (e.g. 12Mbps), clients are influenced to make better choices about which AP to associate with. Because beacons and probe responses sent at a higher MBR can only be decoded at a shorter range, clients hear them from a smaller set of APs in the environment. This approach helps clients associate with the AP that is best able to serve them rather than APs that may be marginally acceptable.

Design

When you’re designing the network, the AP’s MBR should be considered alongside its placement, output power, and channel because it will determine effective cell sizing. Using walls (e.g. putting APs in rooms) to your advantage will block much of the signal beyond your desirable RSSI level.

If you’re trying to transition your network piecemeal from an elderly “coverage” design to a more modern “coverage/capacity” design through rip-and-replace, without a new design, it’s advisable to assess whether 12M would be better for you.

Conclusion

Keeping legacy data rates enabled might have been a good thing for the “coverage” designs of the pre-2009 era. Today’s WiFi landscape, however, is high-density, highly mobile, and mobile-device-centric, and it almost always requires high performance. In that landscape, keeping clients on the optimal AP, at high data rates, will yield the best user experience, and user experience is king. Before you say, “my network doesn’t require high performance”, ask your end users whether they prefer a fast WiFi network or a slow one.

I have one final thought. If you insist on supporting low data rates, do it in the 2.4GHz band, where the user experience is often poor anyway, as enabling low legacy data rates is only going to further impair your user experience.

Good overview of the issue. Thank you, Devin.

But I still want to connect with my 802.11b Orinoco Classic Gold PCMCIA network cards

When performing a pre-deployment (AP on a stick) passive RF survey, does the MBR configured on the AP affect the results of the survey? For example, if we do a survey with an MBR of 12Mbps and one with 36Mbps, is the cell edge for the target RSSI going to be different? Also, do we need to survey using the target MBR value?

I have heard from various colleagues that the MBR doesn’t really matter, as the survey software just checks the RSSI of the detected beacon’s preamble. If that is the case, how does the software recognize the SSID string, which should be included in the frame body of the beacon’s MPDU? Don’t we need to decode the MPDU to do that?

Hi Theo,

Thanks for stopping by.

The answer is YES.

Your colleagues shouldn’t confuse CCI range (preamble detection/decoding) with the range at which MMPDUs can be decoded at the rate they were sent. The MAC header has to be decoded by the survey software in order to read the addresses, and the Beacon frame body has to be decoded in order for the survey software to read the SSID.

For this reason, the MBR does affect the survey. Don’t forget, though, that with pre-deployment surveys, you should survey until the AP (on a stick) goes away, in three dimensions. This gives you both your useful range and your CCI range.

Hope this helps!

Devinator

Thank you for clarifying this for me Devin.

In this case, do we survey (APOAS) using a typical MBR of 12Mbps and then adjust during the optimization phase, or survey directly with a higher value, e.g. 18 or 24Mbps?

Theo

Survey directly with your target MBR of 12Mbps.

Good information. I currently have the MBR set at 12 for both 2.4GHz and 5GHz. I don’t have survey software available to me; do you suggest moving both to 24 without being able to do a survey? Is there anything else that can be used to verify it’s not too high?

Hi JP,

A survey should always be performed as a validation step.

A survey will not tell you whether or not to move from 12M to 24M. The two issues that we are trying to control using MBRs are:

1) Airtime consumption

2) Client connectivity range

If you have a protocol analyzer, or even a good scanner like WiFi Explorer, look at your airtime consumption. If it’s low, then 12M is fine. If it’s high, then moving to 24M will save airtime to some smallish degree, and will also reduce the interference range of each BSS.

Good write-up, however I have a comment: you state that if you want to enable lower MBRs to do so on 2.4GHz.

In my mind, that doesn’t make much sense when it comes to dual-band clients as you want to coax these devices onto 5GHz. With that in mind, would it not make more sense to more aggressively trim the MBR in 2.4GHz – say to 24 or even 54 (design dependent), while leaving 5GHz to something like 12 or 24?

Thanks.

Hi Jeremy,

Thanks for dropping by. You should trim the low rates on BOTH bands. Take a look at the data rate configurations in the very first graphics in this blog post for my recommended configuration settings.

The only time you should consider MBRs higher than 12Mbps is in VHD environments like stadiums where 24Mbps would be acceptable in most situations. I personally still stick to 12Mbps in those environments because the differential, from an airtime savings perspective, is minimal between 12M and 24M, but the reliability of client connectivity COULD be impacted by moving higher than 24M in some cases.

Hope this helps!

Devin

Great Blog.

We intend to allow 802.11b at 11 Mbps only; anything below won’t be accepted. From 802.11a/g on, we go for 12 Mbps as the MBR.

“Raising the MBR to 12M will require higher RSSI/SNR, which means that the PSDU (MPDU) will only be decodable at a shorter range.”

How does it matter that the data field is harder to decode if the PLCP preamble and header can be easily decoded?

“By raising the MBR to 12M, it limits the data rate that both the AP and the clients can use for MAC layer operations.”

In one network, the APs use a 12M MBR, but clients use data rates like 6M. If 6M, 12M, and 24M are mandatory, how can the AP force clients not to use those rates?

One vendor does not support MBR settings but recommends setting a minimum RSSI like -70 dBm. Below that, clients are kicked. How does this compare to MBR? I know that beacons still use 1M or 6M, but what about data frames?

Hi Tem,

Thanks for your questions.

Your questions:

1) How does it matter that data field is harder to decode if PLCP preamble and header can be easily decoded?

Answer:

Perhaps you’re thinking about the interference range caused by an AP when you ask this question. The interference range of the AP is indeed caused by the modulation type of its PLCP headers. That, however, has no bearing on the interference radius of a BSS. The three things that determine the interference radius of a BSS are:

1) Position/direction of the entire set of client devices associated with the AP.

2) Output power of each client device.

3) The modulation type used for each PLCP header of *client* transmissions.

To help you understand this, suppose that the AP and client are both using +10dBm EIRP output power on Channel 36, and the client is 4 meters away from the AP. The client’s power is +10dBm, but the AP’s power at the client’s position is roughly +10dBm - 47dB - 6dB - 6dB = -49dBm: about 47dB of free-space path loss over the first meter, plus about 6dB for each doubling of distance (1 to 2 meters, then 2 to 4 meters). That means that, at the client’s position, the client’s power is 59dB higher than the AP’s. That’s almost 1 million times more power from the client, and that’s only at 4m. As the client moves farther away from the AP, the difference grows, so the client is always FAR louder. This means that the AP’s output power and PLCP header modulation type (and data rate) are irrelevant when considering the interference radius of a BSS.
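The arithmetic above follows the free-space path loss (FSPL) model, FSPL(dB) = 20·log10(d in meters) + 20·log10(f in MHz) - 27.55. A minimal sketch, assuming free space (no walls or bodies), 0 dBi receive antenna gain, and Channel 36’s 5180 MHz center frequency. The exact formula gives about 46.7dB of loss at 1m and about -48.8dBm at 4m, matching the rounded figures in the example.

```python
import math

CH36_MHZ = 5180  # center frequency of 5GHz Channel 36


def fspl_db(distance_m, freq_mhz):
    """Free-space path loss in dB, distance in meters, frequency in MHz."""
    return 20 * math.log10(distance_m) + 20 * math.log10(freq_mhz) - 27.55


def received_dbm(eirp_dbm, distance_m, freq_mhz):
    """Signal level at a receiver with 0 dBi antenna gain, in free space."""
    return eirp_dbm - fspl_db(distance_m, freq_mhz)


if __name__ == "__main__":
    ap_at_client = received_dbm(10, 4, CH36_MHZ)
    gap_db = 10 - ap_at_client  # client's own +10 dBm vs. AP's signal there
    print(f"AP signal at the client: {ap_at_client:.1f} dBm")
    print(f"client is {gap_db:.1f} dB ({10 ** (gap_db / 10):,.0f}x) louder there")
```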

One of the many reasons we want to configure the MBR for 12Mbps is to keep the clients as close as possible to the AP without wrecking reliable connections. The closer the clients are to the AP, the less interference they cause to other APs (and their clients) operating on the same channel. Clients cause far more CCI than APs. Another reason for keeping the MBR at 12Mbps is to lessen the airtime used by management traffic such as Beacons and Probe Responses.

2) In one network, APs use a 12M MBR, but clients use data rates like 6M. If 6M, 12M, and 24M are mandatory, how can the AP force clients not to use those rates? One vendor does not support MBR settings but recommends setting a minimum RSSI like -70 dBm, below which clients are kicked. How does this compare to MBR? I know that beacons still use 1M or 6M, but what about data frames?

Answer:

You cannot stop clients from using low rates while they are in State 1 of the 802.11 connectivity state machine (“Discovery”), because they will use their lowest supported rates for management frames like Probe Requests. However, once they are associated, they are not supposed to use data rates lower than the minimum basic or minimum supported rates, which are announced in Beacon and Probe Response frames. So, if the AP says that 12M is the MBR, then associated clients should be using 12M and higher data rates for control, management, and data frames.

If a vendor does not support configuring Basic or Supported rates, then their equipment is almost certainly using the lowest supported rates of each band as its Basic rates, and that will cause very high channel overhead (airtime use) and often a very poor user experience (e.g. sticky clients, unnecessary CCI, etc.).

Using an RSSI value in that manner can adversely affect client user experience, because client devices are supposed to make the roaming decision. If you combine a feature like that with carefully configured 802.11k and 802.11v features, it might work OK, but APs should not just disconnect clients that way.

The unidirectional data rates used for Data frames are normally determined by SNR, but a client or AP driver may use other factors. Check out MCSIndex.com and http://www.revolutionwifi.net/revolutionwifi/2014/09/wi-fi-snr-to-mcs-data-rate-mapping.html. These should help you determine which MCS a particular client device is using.

Hope this helps.

Great article, especially when using Cisco WAP 371 / 571 access points (the settings look exactly like your two screenshots at the top). I’ve been experimenting more and more with these settings lately, mostly because we sometimes had AirPlay speakers drop out or clients (iPhones) dropping WiFi out of nowhere.

We use 5GHz and 2.4GHz, both on the same SSID name, but I have band steering disabled, as I still think clients should decide whether to stay on 5GHz or not, right? I have now set the 2.4GHz rates to a 12M minimum (supported and basic), and the same for 5GHz. The only thing I’m afraid of now is that phones might drop a bit early when they go below that 12M?